Serverless technology is on the tip of everyone’s tongue, and cloud providers sure are pushing hard for its adoption. The question is, is it all overrated hype or is there something to actually gain from transitioning to this model? In this article we will briefly tackle a few points that will leave you with a clearer idea of Serverless’ potential now, and in the future.

Serverless purports to be a clear winner in many aspects of application development and deployment. We’ve chosen a selection of these to compare this new technology with two more traditional approaches, namely virtualisation (EC2s) and containerisation (Kubernetes) on the AWS platform. We’ll be comparing each technology based on:

- Cost

- Scalability

- Ease of use

- Sustainability

Good news for the bottom line – comparing costs

Cost is the aspect in which Serverless really shines in comparison to the alternatives. Serverless applications are proven to be a smaller financial burden than their counterparts in a multitude of cases. Before these can be demonstrated, one must first understand what is meant by the term “serverless”, and why it is a noun and not an adjective.

Because the Serverless approach is all about running code without having to manage your own hardware, we are essentially replacing, or better, abstracting, the idea of an “always-on” server with event-driven processes. This way, cost can be drastically reduced in most applications that don’t involve compute-intensive workloads or need extensive processing power to manipulate huge amounts of data.

How? Because the main advantage of Serverless offerings is the Pay as You Go model, which only charges the owner for the memory, compute power or data that they actually use. Essentially, whenever the application is idle, it is not costing the owner anything (unlike a Kubernetes cluster or EC2 instances). For example, AWS Lambda is priced by the number of invocations ($0.20/1M requests) and the computing power used while the function runs ($0.0000166667 for every GB-second). Running a 128MB Lambda for 100ms would therefore set you back about $0.00000041 in the Irish region, request charge included.
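The arithmetic behind that figure can be sketched in a few lines of Python. This is a minimal illustration that uses only the two prices quoted above; the function name is ours, and the free tier and any other AWS charges are deliberately ignored.

```python
# Prices quoted in the article for the Irish region.
PRICE_PER_MILLION_REQUESTS = 0.20      # USD per 1M invocations
PRICE_PER_GB_SECOND = 0.0000166667     # USD per GB-second of compute


def lambda_invocation_cost(memory_mb: float, duration_ms: float) -> float:
    """Return the cost in USD of a single invocation (free tier ignored)."""
    request_cost = PRICE_PER_MILLION_REQUESTS / 1_000_000
    # Lambda bills compute as allocated memory (GB) multiplied by run time (s).
    gb_seconds = (memory_mb / 1024) * (duration_ms / 1000)
    return request_cost + gb_seconds * PRICE_PER_GB_SECOND


if __name__ == "__main__":
    cost = lambda_invocation_cost(memory_mb=128, duration_ms=100)
    print(f"${cost:.8f}")  # prints $0.00000041
```

Roughly half of that amount is the per-request charge and half is compute, so for very short functions the number of invocations matters as much as the memory setting.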

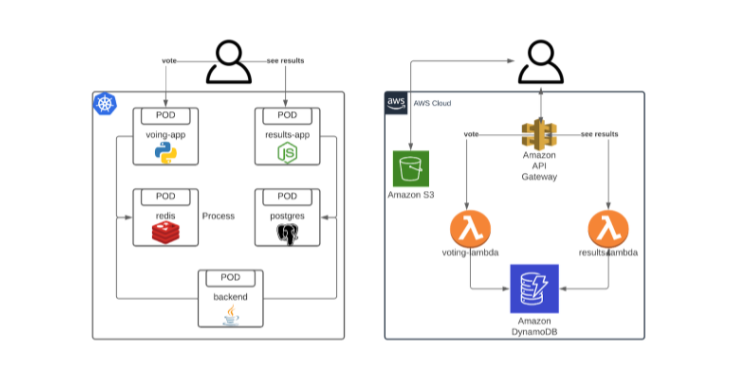

Take a simple web application, in this case a voting app. Traditionally, we would have a Kubernetes cluster running on EKS, managing pods that contain the different applications needed for the webapp to work, end-to-end. These pods, depending on their size, may or may not reside inside the same node. In any case, such a webapp would need a powerful EC2 instance to allow for 5 different applications to run continuously.

On the other hand, a Serverless implementation of the same exact webapp would feature components that do not run continuously, meaning only a fraction of the computational power is required. Furthermore, a serverless implementation would include components that are better optimised for running in cloud environments.

Imagine this application receives 50 sessions a day. Using the Cost Calculator developed by Xavier Lefèvre based on AWS’s official cost calculator, this sort of application would cost $0.50 a month when developed using Serverless architecture, free tier included. This is minimal compared to the cost you would need to run the smallest type of EC2 instance for a whole month (t3a.nano at $3.43, which still wouldn’t be sufficient to run the whole K8s cluster). Even before considering the costs for self-managed monitoring and scalability, you can already see the potential for even further cost saving at a larger scale.
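To get a feel for how much traffic it takes before the always-on instance becomes the cheaper option, here is a rough break-even sketch. It assumes every request is a single 128MB, 100ms Lambda invocation at the per-invocation price quoted earlier, and it ignores the free tier and every other service a real application would use (API gateway, storage and so on), so treat the result as an order of magnitude rather than a quote.

```python
import math

# Figures quoted in the article; everything else here is an illustrative assumption.
LAMBDA_COST_PER_INVOCATION = 0.00000041  # USD, 128MB Lambda running for 100ms
T3A_NANO_MONTHLY_COST = 3.43             # USD per month for the smallest EC2 instance


def break_even_invocations(monthly_instance_cost: float, cost_per_invocation: float) -> int:
    """Invocations per month that cost as much as the always-on instance."""
    return math.floor(monthly_instance_cost / cost_per_invocation)


if __name__ == "__main__":
    per_month = break_even_invocations(T3A_NANO_MONTHLY_COST, LAMBDA_COST_PER_INVOCATION)
    print(f"Break-even: about {per_month / 1_000_000:.1f} million invocations a month")
```

Under these assumptions the break-even point is a little over eight million invocations a month, far beyond what 50 sessions a day would generate.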

Another advantage of serverless is that it allows granular cost control over each feature and piece of architecture you deploy, allowing for more efficient FinOps.

But will it scale?

Scalability is another flagship feature of Serverless architecture, and here it lives up to expectations. Why? Well, let’s go back to the voting webapp example. In Kubernetes, when load increases and individual pods are under strain, more pods will automatically be created to match the demand (only if you have configured autoscaling, such as a Horizontal Pod Autoscaler, for your Deployment/ReplicaSet, however). Once the existing nodes are full, new containers have to be spun up on new nodes, which means more EC2 instances are launched, with all the overhead that comes with them.
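For context, pod autoscaling in Kubernetes is usually handled by the Horizontal Pod Autoscaler, whose documented scaling rule is simple enough to sketch. The function below is an illustration of that rule only: it leaves out the tolerance band, stabilisation windows and min/max replica bounds that a real autoscaler applies, and the example numbers are made up.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Core Horizontal Pod Autoscaler rule: scale in proportion to metric / target."""
    return math.ceil(current_replicas * current_metric / target_metric)


if __name__ == "__main__":
    # Hypothetical load: 3 pods averaging 90% CPU against a 60% target.
    print(desired_replicas(current_replicas=3, current_metric=90, target_metric=60))  # prints 5
```

Each of those extra pods still needs somewhere to run, which is where the additional nodes and EC2 instances come in.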

Serverless components like Lambdas, on the other hand, allow for a large number of concurrent executions, meaning that there is no wait time for virtual machines to turn on and scaling is nearly instant. In Ireland, 3000 Lambdas will launch concurrently during a burst, and AWS will keep adding capacity for 500 more every minute. All of this happens without any effort from you.
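As a back-of-the-envelope illustration of those burst figures, the sketch below estimates how long it would take to reach a given level of concurrency. It uses only the two numbers quoted above and ignores any account-level concurrency quota, which in practice may cap you well before these limits; the function name is ours.

```python
import math

# Burst figures quoted in the article for the Irish region.
INITIAL_BURST = 3000       # concurrent executions available immediately
EXTRA_PER_MINUTE = 500     # additional concurrent executions added each minute


def minutes_to_reach(target_concurrency: int) -> int:
    """Whole minutes needed before `target_concurrency` executions can run at once."""
    if target_concurrency <= INITIAL_BURST:
        return 0
    return math.ceil((target_concurrency - INITIAL_BURST) / EXTRA_PER_MINUTE)


if __name__ == "__main__":
    for target in (2000, 3200, 5000):
        print(target, "->", minutes_to_reach(target), "min")
```

With these figures, 2000 concurrent executions are available immediately, 3200 after one minute and 5000 after four.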

The key difference here is that while the developer does not have to concern themselves with scaling policies when following a Serverless approach (as it is entirely managed by the cloud provider), the same cannot be said when dealing with containers, where this automation has to be set up and configured beforehand.

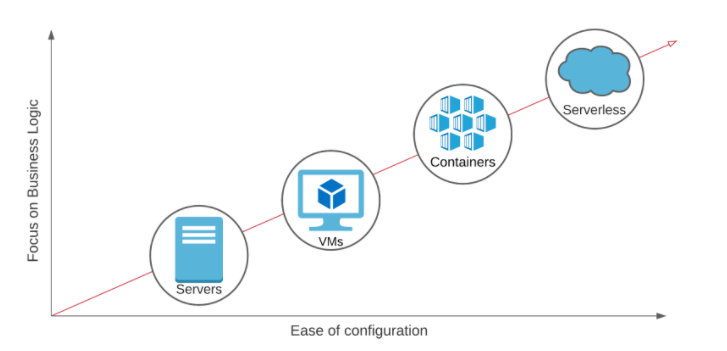

Considering ease of use

Serverless is particularly suited for use cases where time to market is important and the development team has no operational knowledge or has no time to deal with the underlying infrastructure. This means that developers can spend more time focusing on the application rather than deploying the infrastructure to support it.

With serverless, developers can build applications rapidly. Serverless components abstract away the infrastructure and hand it over to AWS to manage. This allows developers with little to no experience of AWS to be able to quickly start building, meaning they are free to focus on what’s important to them and do not have to worry about upgrading & patching servers.

Rapid development is also made easier with Lambda, which supports a diverse range of programming languages allowing for developers with a variety of skill sets to utilise the service.
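To give a sense of how little code a Lambda needs, here is a hypothetical handler for the voting app written in Python. It assumes the function sits behind an API Gateway proxy-style integration (a JSON string in `event["body"]`, a `statusCode`/`body` dictionary in return), and it keeps the tally in memory purely for illustration; a real deployment would write to a managed datastore, since in-memory state is not shared or kept between Lambda instances.

```python
import json
from collections import Counter

# Stand-in for a real datastore (assumption for this sketch only).
VOTES = Counter()


def handler(event, context):
    """Record one vote from an API Gateway proxy-style event."""
    body = json.loads(event.get("body") or "{}")
    choice = body.get("choice")
    if not choice:
        return {"statusCode": 400, "body": json.dumps({"error": "missing 'choice'"})}
    VOTES[choice] += 1
    return {
        "statusCode": 200,
        "body": json.dumps({"choice": choice, "votes": VOTES[choice]}),
    }


if __name__ == "__main__":
    # Local smoke test; on AWS the platform calls handler() for you.
    print(handler({"body": json.dumps({"choice": "cats"})}, None))
```

There is no web server, port or process manager to configure here: the function is simply the unit of deployment.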

Lambda, however, isn’t the only serverless option for reducing the time to market. Fargate is another AWS offering that allows you to keep using Kubernetes on a pay as you go basis, without having to radically redevelop your application to fit a new architecture. This is a very popular option for legacy applications, as it’s much simpler to manage than a traditional EC2 instance or EKS cluster.

AWS handles the maintenance of the underlying infrastructure for Fargate, meaning you don’t have to deal with any of the overhead of patching and updating. If a security vulnerability were found in the underlying hosts, EC2 users would need to patch it manually, while Fargate users wouldn’t need to worry, as this is left to AWS to fix. For this reason Fargate is seen as a more secure option.

Generally, there is no significant cost saving in using Fargate over EC2/EKS. In the right use case, however, Fargate can offer great benefits. Fargate has a pay as you go model where you’re billed per second for the vCPU and memory your workload requests, meaning you only pay for what you use. With EC2, users can under-provision, leaving too few instances to meet the demand of their application, or more commonly over-provision, paying for resources they don’t utilise. Fargate, however, provisions the compute for each workload out of the box, removing the instance-sizing problem (thus making it much easier to use).

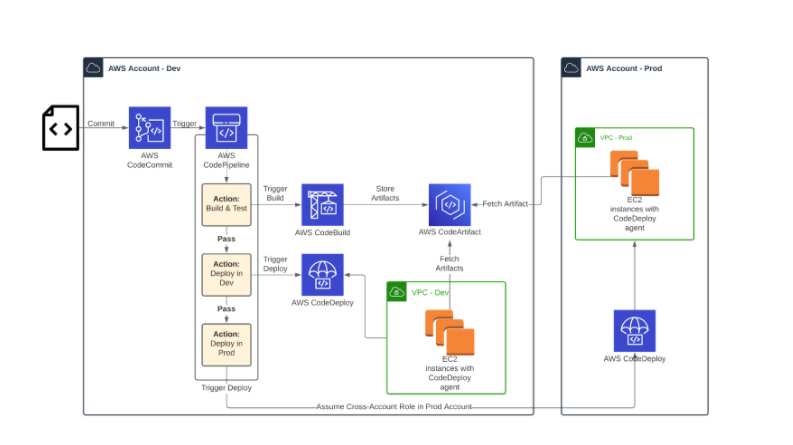

Visualisation of a multi-account CI/CD pipeline that only makes use of AWS Serverless components to deploy changes.

AWS also provides a suite of serverless developer tools to build a CI/CD workflow for your applications, which in turn speeds up the time to market. These services include CodeCommit (source control), CodeBuild (continuous integration), CodeDeploy (deployment service), CodePipeline (continuous delivery) and CodeArtifact (artifact repository). The advantage of these completely AWS managed services is that they require minimal setup compared to alternatives such as Jenkins and GitLab. In the diagram above you can see how these services can be integrated together and what the architecture would look like.

Sustainability

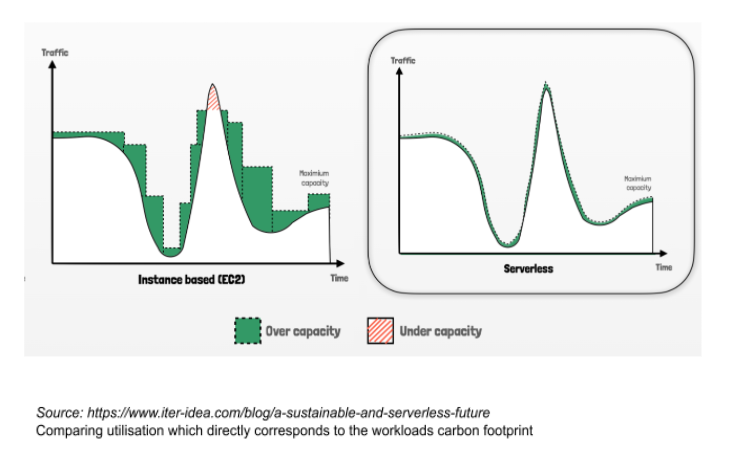

Sustainability is an important aspect that you should keep in mind when designing solutions as sustainability in the cloud is the user’s responsibility. Running an application in a serverless architecture can be an efficient way to lower your workload’s carbon footprint.

Let’s compare how the carbon footprint varies between an application using EC2 and one using Lambda. If you were to run an application on an EC2 instance, there might be periods where the instance is sitting idle. This means that the instance is underutilised and wasteful in terms of energy.

Running this via Lambda is more energy efficient. When a Lambda is triggered, it runs within a microVM on an AWS managed EC2 instance. When the Lambda finishes running, the microVM is replaced with another so that someone else’s Lambda can run. This approach ensures higher utilisation of existing resources as opposed to utilising a fraction of new resources.

So is it time to go serverless?

Having compared and contrasted architecture solutions, it’s true to say that Serverless does not fit all use cases and containerisation remains the best option for certain applications.

Indeed, there are plenty of cases where Serverless is not the correct solution to a problem and can end up costing more than the older alternatives. Examples include applications where Java/JVM is used, where long running tasks are needed, or that require access to low level APIs (e.g. thread control).

Furthermore, regulatory restrictions might prevent certain companies (such as ones providing financial services or governmental organisations) from being able to use software and hardware shared between multiple customers, and would be better off using dedicated hosts if they still want to make use of the cloud. Serverless applications are generally not available on dedicated hosts and would therefore be unsuitable for these use cases.

However, when Serverless is implemented properly it offers significant cost savings over traditional approaches, positioning itself not only as a valid alternative, but one that offers significant improvements on current technologies.

If you would like more information or advice on how serverless architecture could help you cut costs, and carbon, contact us here.

Find out more about our partnership with AWS.