Within an application, database calls can represent a significant time cost. With the ElastiCache service, AWS offers solutions to streamline and optimize your access to databases, thereby boosting the performance of your applications.

AWS ElastiCache Service Overview

AWS ElastiCache is a fully-managed in-memory datastore service.

In-memory storage involves two essential characteristics:

- Rapidity: ElastiCache is fast, since we are talking about memory rather than disk access

- Limitation: the storage space is limited by the physical memory size

Like most database services, ElastiCache is primarily a regional service. It offers two engines with very different characteristics: Memcached and Redis.

While ElastiCache/Memcached is multithreaded, which helps performance, it only offers simple data types (object cache) and fewer features. It also has no replication, and therefore no Multi-AZ failover.

The other engine offered by ElastiCache is Redis, which provides more features, such as Multi-AZ support, more complex data types, encryption and backups. It also supports data sharding.

The ElastiCache/Redis service also has multiple certifications, such as the Payment Card Industry Data Security Standard (PCI DSS) and the Health Insurance Portability and Accountability Act (HIPAA), to protect payment and health data. On the other hand, it offers slightly lower performance than ElastiCache/Memcached, because Redis executes commands on a single thread.

Scenario presentation

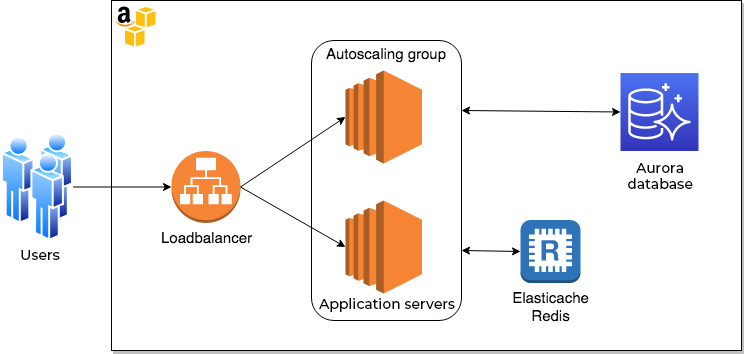

Let’s take the case of a CMS-type web application that is expected to receive a lot of traffic.

We’ll imagine that this application’s traffic is regional (whether national or continental) and that a single-region topology has been chosen. For security reasons, our specifications require data encryption across the entire application scope.

Choice of architecture

General principles

Based on these specifications, we will choose Redis in order to benefit from ElastiCache’s encryption features.

- An ElastiCache “instantiation” can have 1 to 15 node groups (shards).

- Each node group has one primary node and can have up to 5 read-only replicas.

- This means that there can be a maximum of 90 nodes (15 primaries and 75 replicas).
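Counting the primary node of each node group, the 90-node ceiling follows directly from the two limits above:

```latex
N_{\max} = \underbrace{15}_{\text{node groups}} \times \bigl(\underbrace{1}_{\text{primary}} + \underbrace{5}_{\text{replicas}}\bigr) = 15 \times 6 = 90 \text{ nodes}
```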

If we choose to have several node groups, we enter what ElastiCache for Redis calls “cluster mode enabled”, with data sharded across the node groups.

For the sake of simplicity, in this example, we will stick with a “cluster mode disabled” configuration (in ElastiCache terminology), more commonly referred to as “no sharding”. We will therefore have a single node group: one primary node and a certain number of replicas.

As with Aurora-type services, replication is asynchronous: a replica may therefore lag behind its primary. The replicas can be distributed over several Availability Zones (AZs), but in the cluster-mode-disabled configuration used here, ElastiCache does not offer autoscaling.

An interesting feature of ElastiCache is automatic failover in the event of node failure, an option that is enabled by default. However, even with Multi-AZ automatic failover enabled, a failover can still cause 3 to 6 minutes of downtime.
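As a rough illustration of this architecture, the sketch below builds the request for boto3's `create_replication_group` call in cluster mode disabled: one node group spread over several AZs, with automatic failover and Multi-AZ turned on whenever there is at least one replica. It only assembles a dictionary, so it runs without AWS credentials; the group ID, node type and AZ names are hypothetical, and the parameters should be checked against the current boto3 documentation before use.

```python
from typing import Any, Dict, List


def replication_group_params(group_id: str, node_type: str,
                             availability_zones: List[str]) -> Dict[str, Any]:
    """Build keyword arguments for boto3's ElastiCache create_replication_group call.

    Cluster mode disabled: a single node group made of one primary plus replicas,
    with one node placed in each listed AZ (the first AZ hosts the primary).
    """
    num_nodes = len(availability_zones)
    if not 1 <= num_nodes <= 6:
        raise ValueError("cluster mode disabled allows 1 primary + at most 5 replicas")
    # Automatic failover (and Multi-AZ) is only possible with at least one replica.
    with_failover = num_nodes >= 2
    return {
        "ReplicationGroupId": group_id,
        "ReplicationGroupDescription": "CMS object cache (cluster mode disabled)",
        "Engine": "redis",
        "CacheNodeType": node_type,
        "NumCacheClusters": num_nodes,  # primary + replicas
        "PreferredCacheClusterAZs": availability_zones,
        "AutomaticFailoverEnabled": with_failover,
        "MultiAZEnabled": with_failover,
    }
```

The resulting dictionary could then be passed as `boto3.client("elasticache").create_replication_group(**params)`, possibly merged with encryption and parameter group settings.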

ElastiCache is configured through Parameter Groups, much like the RDS product.

Among the most important Redis parameters to consider are:

| Parameter | Description |
|---|---|
| maxmemory | Static value imposed by AWS that indicates the maximum amount of memory that can be used to store cached objects. This value depends on the instance type selected |
| reserved-memory-percent | Percentage of memory reserved by ElastiCache for its own, non-data use. This part of the memory cannot be used for caching data |
| maxmemory-policy | Configures Redis’s behavior (the eviction policy) when the memory becomes full |
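Taken together, `maxmemory` and `reserved-memory-percent` determine how much space is actually left for cached objects. A minimal sketch, assuming the reserved share is taken out of `maxmemory` and that `reserved-memory-percent` is left at 25% (the value we assume for recent parameter group families; check your own parameter group). The 13 GB `maxmemory` figure is purely hypothetical.

```python
def usable_cache_bytes(maxmemory_bytes: int, reserved_memory_percent: float = 25.0) -> int:
    """Estimate how much of maxmemory remains for cached data.

    reserved-memory-percent is applied to maxmemory; the reserved share is kept
    for non-data use and cannot hold cached objects.
    """
    if maxmemory_bytes < 0:
        raise ValueError("maxmemory must be non-negative")
    if not 0 <= reserved_memory_percent <= 100:
        raise ValueError("reserved-memory-percent must be between 0 and 100")
    return int(maxmemory_bytes * (100 - reserved_memory_percent) / 100)


# Hypothetical node with 13 GB of maxmemory and the 25% reservation assumed above.
print(usable_cache_bytes(13_000_000_000))  # 9750000000
```

The eviction behavior itself is changed in a custom parameter group (default parameter groups cannot be modified), for example with boto3's `modify_cache_parameter_group` and a `ParameterNameValues` entry setting `maxmemory-policy`.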

ElastiCache and security

Like most AWS services, ElastiCache can be protected by security groups. The service also supports encryption at rest and in transit. In-transit encryption is a prerequisite for enabling Redis authentication (Redis AUTH).
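For our scenario, the encryption requirements translate into a few extra `create_replication_group` arguments. The sketch below assumes AWS's documented AUTH token rules as we understand them (16 to 128 printable ASCII characters, none of `/`, `"` or `@`) and conservatively rejects spaces as well. The token used in the tests is hypothetical; a real one belongs in a secrets store rather than in code.

```python
from typing import Any, Dict, Optional

# Characters that, as we understand AWS's documented rules, an AUTH token may not contain.
FORBIDDEN_CHARS = set('/"@')


def validate_auth_token(token: str) -> str:
    """Check a Redis AUTH token against the length and character rules assumed here."""
    if not 16 <= len(token) <= 128:
        raise ValueError("AUTH token must be 16 to 128 characters long")
    for ch in token:
        # Conservatively accept visible ASCII only (no spaces or control characters).
        if not 33 <= ord(ch) <= 126 or ch in FORBIDDEN_CHARS:
            raise ValueError(f"invalid character in AUTH token: {ch!r}")
    return token


def encryption_params(auth_token: Optional[str] = None) -> Dict[str, Any]:
    """Extra create_replication_group arguments enabling both kinds of encryption."""
    params: Dict[str, Any] = {
        "AtRestEncryptionEnabled": True,
        "TransitEncryptionEnabled": True,  # required before an AuthToken can be set
    }
    if auth_token is not None:
        params["AuthToken"] = validate_auth_token(auth_token)
    return params
```

Clients then have to connect over TLS and present the token, for instance with redis-py: `redis.Redis(host=..., port=6379, ssl=True, password=token)`.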

Like Aurora, ElastiCache/Redis offers several endpoints:

- The “primary endpoint”, which points to the primary node (master) of the Redis instantiation

- The “reader endpoint”, which distributes connections across all the replicas (the primary is excluded)

Note that although each node has its own endpoint, it is recommended to rely on the instantiation-level endpoints instead: they are updated when a failover or a node replacement changes which node is the primary.

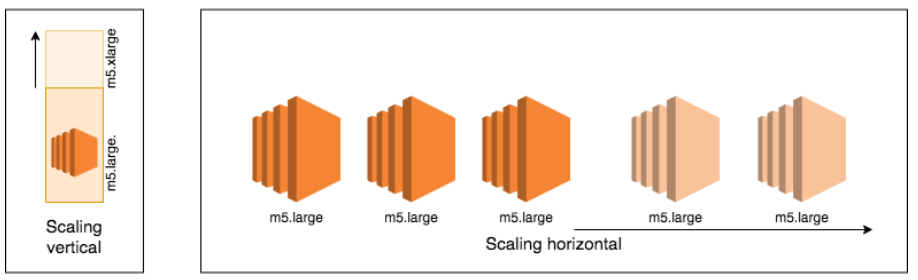
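In application code, this typically means keeping two connections and routing commands by type. The sketch below uses a hypothetical `EndpointRouter` and an in-memory `DictClient` stand-in so it runs without a cluster; with redis-py, `primary` and `reader` would instead be two `redis.Redis` clients pointing at the primary and reader endpoints. Because replication is asynchronous, a read sent to the reader endpoint right after a write may not see it yet, hence the `get_fresh` escape hatch.

```python
from typing import Any, Dict, Optional


class DictClient:
    """In-memory stand-in for a Redis client connected to one endpoint."""

    def __init__(self) -> None:
        self.data: Dict[str, Any] = {}

    def get(self, key: str) -> Optional[Any]:
        return self.data.get(key)

    def set(self, key: str, value: Any, ex: Optional[int] = None) -> bool:
        self.data[key] = value  # TTL ignored in this stand-in
        return True

    def delete(self, key: str) -> int:
        return 1 if self.data.pop(key, None) is not None else 0


class EndpointRouter:
    """Send writes to the primary endpoint and reads to the reader endpoint."""

    def __init__(self, primary: Any, reader: Any) -> None:
        self.primary = primary
        self.reader = reader

    def set(self, key: str, value: Any, ex: Optional[int] = None) -> Any:
        return self.primary.set(key, value, ex=ex)

    def delete(self, key: str) -> Any:
        return self.primary.delete(key)

    def get(self, key: str) -> Any:
        # Replicas may lag behind the primary (asynchronous replication).
        return self.reader.get(key)

    def get_fresh(self, key: str) -> Any:
        # Read-after-write: query the primary when stale data is not acceptable.
        return self.primary.get(key)
```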

ElastiCache/Redis and scaling

ElastiCache allows horizontal and vertical scaling.

In the case of vertical scaling, the operation is performed with a “minimal” downtime. Note that within an ElastiCache/Redis instantiation, all nodes must be of the same type.

Horizontal scaling is also possible. This consists of adding or removing nodes within your instantiation. Note that you need at least one replica to use Multi-AZ and benefit from automatic failover.

Using a cache service

There are two main strategies for adding objects to the ElastiCache datastore, both implemented in the application code:

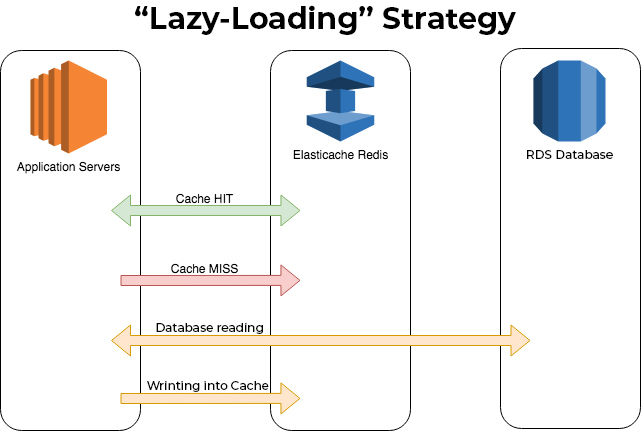

Lazy-Loading

In this type of use, when the application needs to retrieve data, it first queries the cache. If the object is available, ElastiCache returns the data directly. If it is not, the application queries the database and then writes the result to the ElastiCache datastore.

This is a reactive approach, which allows for a smaller datastore since only the requested objects are stored.

The downside of this method is the “miss penalty”: when the object is not cached, the application needs three trips (the cache lookup, the database query and the write to the cache), which makes that request slower than a direct database query.

Another problem with this approach is that cached objects can become stale, since they are not updated when the underlying data changes in the database. It is therefore necessary to set an appropriate time to live (TTL) to limit how long stale data can be served to the application.
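A minimal lazy-loading (cache-aside) sketch, assuming a client that exposes redis-py style `get(key)` and `set(key, value, ex=seconds)` calls. `InMemoryCache` and `ArticleDatabase` are hypothetical stand-ins so the example runs on its own; note that a real redis-py client returns `bytes` unless it is created with `decode_responses=True`.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class InMemoryCache:
    """Stand-in for a redis-py client: only get() and set(key, value, ex=seconds)."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._data: Dict[str, Tuple[Any, Optional[float]]] = {}

    def get(self, key: str) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if expires_at is not None and self._clock() >= expires_at:
            del self._data[key]  # TTL expired: behave as if the key was reclaimed
            return None
        return value

    def set(self, key: str, value: Any, ex: Optional[int] = None) -> bool:
        expires_at = None if ex is None else self._clock() + ex
        self._data[key] = (value, expires_at)
        return True


class ArticleDatabase:
    """Hypothetical database access layer that counts queries."""

    def __init__(self, rows: Dict[int, str]) -> None:
        self.rows = rows
        self.queries = 0

    def fetch_article(self, article_id: int) -> Optional[str]:
        self.queries += 1
        return self.rows.get(article_id)


def get_article(cache: Any, db: ArticleDatabase, article_id: int,
                ttl_seconds: int = 300) -> Optional[str]:
    """Lazy loading: read from the cache first, fall back to the database on a miss."""
    key = f"article:{article_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached  # cache hit
    value = db.fetch_article(article_id)  # cache miss: query the database
    if value is not None:
        cache.set(key, value, ex=ttl_seconds)  # populate the cache with a TTL
    return value
```

The TTL passed to `set` bounds how long a stale copy can survive once the article is modified in the database.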

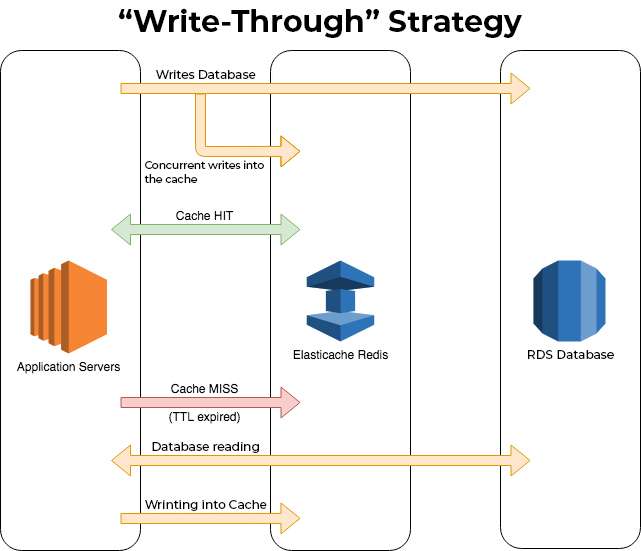

Write-through

The write-through mode is a bit different: each time the application writes to the database, it also writes to the ElastiCache datastore. This is a proactive approach that keeps the cached data in sync with the database.

The downside is that the datastore size will be larger, and it is likely that some of the data stored in the datastore will never actually be accessed.

The impact of this problem can be reduced by once again setting a suitable TTL, which allows unused objects to be removed and helps reduce the size of the datastore.
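The write-through variant only changes the write path. Again a sketch, with hypothetical `DictCache` and `ArticleTable` stand-ins for the redis-py client and the database: the database is written first, then the same value is pushed to the cache with a TTL so that objects nobody reads eventually expire.

```python
from typing import Any, Dict, Optional


class DictCache:
    """Stand-in for a redis-py client; records the TTL passed with each write."""

    def __init__(self) -> None:
        self.data: Dict[str, Any] = {}
        self.ttls: Dict[str, Optional[int]] = {}

    def get(self, key: str) -> Optional[Any]:
        return self.data.get(key)

    def set(self, key: str, value: Any, ex: Optional[int] = None) -> bool:
        self.data[key] = value
        self.ttls[key] = ex
        return True


class ArticleTable:
    """Hypothetical database table accessed by the application."""

    def __init__(self) -> None:
        self.rows: Dict[int, str] = {}

    def write_article(self, article_id: int, content: str) -> None:
        self.rows[article_id] = content


def save_article(cache: Any, db: ArticleTable, article_id: int, content: str,
                 ttl_seconds: int = 3600) -> None:
    """Write-through: every database write is immediately mirrored in the cache."""
    db.write_article(article_id, content)  # the database remains the source of truth
    # A TTL still lets objects that are never read expire and free memory.
    cache.set(f"article:{article_id}", content, ex=ttl_seconds)
```

Writing the database first means that a failed cache write leaves a stale or missing cache entry rather than a wrong database row; combining write-through with lazy loading on the read path covers that case.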

Deleting objects from the datastore

An object can be deleted from the datastore under one of the following circumstances:

| Circumstance | What happens |
|---|---|
| The object’s TTL expires | The object can then be reclaimed by Redis. An object’s TTL is generally set when the object is written to the datastore |
| The datastore is full | Redis evicts objects according to the configured policy. Rather than listing the full specs here, you can find all the detailed technical information about Redis’s eviction policies in this document. Note that ElastiCache’s default eviction policy is volatile-lru: Redis removes the least recently used keys among those that have a TTL set |
| A deletion command has been sent to Redis | You can manually delete an object with the DEL command, either from the Redis command line interface (redis-cli) or through the Redis API (used, for example, via a client library within your application) |
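To make the default `volatile-lru` policy concrete, here is a toy model, not how Redis is implemented: capacity is counted in keys rather than bytes, TTL expiry itself is not simulated, and recency is tracked exactly, whereas Redis approximates LRU by sampling a few keys. Only keys that carry a TTL are eviction candidates; when none does, Redis behaves like `noeviction` and rejects writes with an out-of-memory error, which the model represents with Python's `MemoryError`.

```python
from collections import OrderedDict
from typing import Any, Optional, Tuple


class VolatileLRUStore:
    """Toy model of the volatile-lru eviction policy (capacity counted in keys)."""

    def __init__(self, max_keys: int) -> None:
        self.max_keys = max_keys
        # key -> (value, has_ttl); iteration order runs from least to most recently used
        self._data: "OrderedDict[str, Tuple[Any, bool]]" = OrderedDict()

    def get(self, key: str) -> Optional[Any]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # reading a key makes it the most recently used
        return self._data[key][0]

    def set(self, key: str, value: Any, ttl: Optional[int] = None) -> None:
        if key not in self._data and len(self._data) >= self.max_keys:
            victim = self._pick_victim()
            if victim is None:
                # With no key carrying a TTL, volatile-lru behaves like noeviction.
                raise MemoryError("OOM: no key with a TTL can be evicted")
            del self._data[victim]
        # As with SET without EX, writing a key without a TTL leaves it with none.
        self._data[key] = (value, ttl is not None)
        self._data.move_to_end(key)

    def _pick_victim(self) -> Optional[str]:
        for key, (_, has_ttl) in self._data.items():  # least recently used first
            if has_ttl:
                return key
        return None
```

This also shows why, under `volatile-lru`, objects written without a TTL can end up filling the memory and blocking new writes.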

A final word on TTL

With Redis, there is no “default TTL” applied to objects written to the datastore: a key without a TTL is kept until it is deleted or evicted. Applications must therefore set the TTL explicitly when they write an object (for example with the EX option of the SET command). It is, however, possible to set (or change) the TTL after the fact using Redis commands such as EXPIRE or EXPIREAT.

Redis’s TTL command lets you know the current TTL of an object. The PERSIST command allows you to remove the TTL from an object in the datastore.
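The semantics of these commands can be summarized with a small toy model whose method names mirror the Redis commands (`TTL` returns -1 for a key without a TTL and -2 for a missing key, as in Redis 2.8 and later). It is an illustration, not a Redis client; with redis-py, the corresponding calls are `set(name, value, ex=...)`, `expire`, `expireat`, `ttl` and `persist` on a `redis.Redis` instance.

```python
import time
from typing import Callable, Dict, Optional


class TTLModel:
    """Toy model of how Redis treats TTLs; method names mirror the Redis commands."""

    def __init__(self, clock: Callable[[], float] = time.time) -> None:
        self._clock = clock
        self._values: Dict[str, str] = {}
        self._expires_at: Dict[str, float] = {}  # absolute Unix timestamps

    def _purge_if_expired(self, key: str) -> None:
        deadline = self._expires_at.get(key)
        if deadline is not None and self._clock() >= deadline:
            self._values.pop(key, None)
            del self._expires_at[key]

    def set(self, key: str, value: str, ex: Optional[int] = None) -> None:
        """SET: without EX the key has no TTL (an existing TTL is cleared)."""
        self._values[key] = value
        self._expires_at.pop(key, None)
        if ex is not None:
            self._expires_at[key] = self._clock() + ex

    def expire(self, key: str, seconds: int) -> bool:
        """EXPIRE: set a relative TTL on an existing key."""
        return self.expireat(key, self._clock() + seconds)

    def expireat(self, key: str, unix_time: float) -> bool:
        """EXPIREAT: set an absolute expiry time on an existing key."""
        self._purge_if_expired(key)
        if key not in self._values:
            return False
        self._expires_at[key] = unix_time
        return True

    def ttl(self, key: str) -> int:
        """TTL: remaining seconds, -1 if the key has no TTL, -2 if it does not exist."""
        self._purge_if_expired(key)
        if key not in self._values:
            return -2
        deadline = self._expires_at.get(key)
        if deadline is None:
            return -1
        return round(deadline - self._clock())

    def persist(self, key: str) -> bool:
        """PERSIST: remove the TTL; False if the key is missing or had no TTL."""
        self._purge_if_expired(key)
        return self._expires_at.pop(key, None) is not None
```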

Service management & operations

One of the particularities of the ElastiCache service is that it does not publish logs by default, so you won’t find any in CloudWatch Logs (recent Redis engine versions can optionally deliver the slow log and engine log there). However, AWS does publish a large number of data points to CloudWatch Metrics. These metrics concern both the instance (“host-level metrics”) and the Redis application itself.

You can therefore find numerous technical indicators, such as:

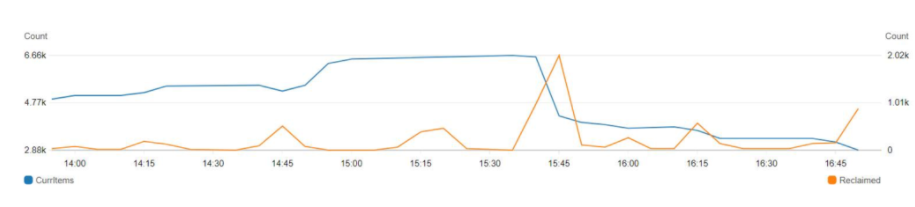

- The number of items currently stored in the datastore

- The number of evictions performed by Redis

- The number of reclaimed objects (expired TTL) deleted by Redis

- The number of GET/SET commands executed by Redis, as well as their latency

In the graph above, at 3:45 pm, we observe a mass deletion of “reclaimed” objects (orange curve): half of the objects were deleted because their TTL had expired.
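These metrics can also be pulled programmatically. The sketch below builds requests for CloudWatch's `get_metric_statistics` (called through boto3) for the indicators listed above, plus a helper computing the cache hit ratio from the `CacheHits` and `CacheMisses` metrics. The node ID is hypothetical, and the statistic chosen for each metric is our own assumption.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, List

# ElastiCache for Redis metrics matching the indicators listed above, with the
# statistic assumed to be most useful for each one.
METRIC_STATISTICS = {
    "CurrItems": "Average",           # items currently stored
    "Evictions": "Sum",               # keys evicted because memory was full
    "Reclaimed": "Sum",               # keys removed because their TTL expired
    "GetTypeCmds": "Sum",             # read commands
    "SetTypeCmds": "Sum",             # write commands
    "GetTypeCmdsLatency": "Average",  # read command latency
    "SetTypeCmdsLatency": "Average",  # write command latency
}


def metric_requests(cache_cluster_id: str, end: datetime, hours: int = 3,
                    period_seconds: int = 300) -> List[Dict[str, Any]]:
    """Build keyword arguments for CloudWatch get_metric_statistics, one per metric."""
    start = end - timedelta(hours=hours)
    return [
        {
            "Namespace": "AWS/ElastiCache",
            "MetricName": name,
            "Dimensions": [{"Name": "CacheClusterId", "Value": cache_cluster_id}],
            "StartTime": start,
            "EndTime": end,
            "Period": period_seconds,
            "Statistics": [statistic],
        }
        for name, statistic in METRIC_STATISTICS.items()
    ]


def hit_ratio(cache_hits: float, cache_misses: float) -> float:
    """Share of lookups served from the cache: CacheHits / (CacheHits + CacheMisses)."""
    total = cache_hits + cache_misses
    return cache_hits / total if total else 0.0
```

Each request can then be sent with `boto3.client("cloudwatch").get_metric_statistics(**request)`.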


Finally, ElastiCache publishes events that can be delivered as Amazon SNS notifications, allowing you to set up alerts or other forms of processing on events such as:

- Failover

- Scaling

- Node reboot

- Node replacement

A Cloud case study: improved performance

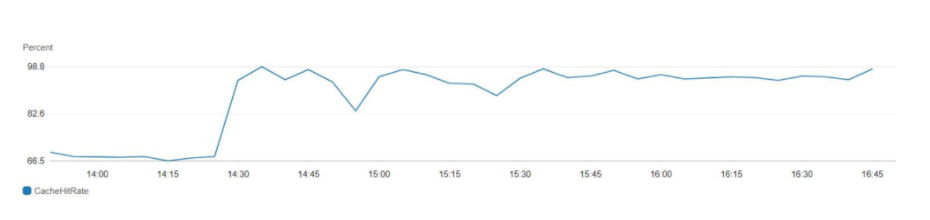

Following the implementation of ElastiCache/Redis for one of our clients, we have seen the following performance improvements:

- Between 50% and 70% of requests are served by ElastiCache rather than by the database

- ElastiCache responds 5 to 10 times faster than the relational database management system (DBMS) previously used
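To see how a hit ratio translates into response time, the expected read latency under lazy loading can be estimated as below. The 1 ms cache and 10 ms database latencies used here are hypothetical (chosen to match the upper bound of the 5 to 10 times speedup above), and the write-back on a miss is assumed to cost one cache round trip.

```python
def expected_read_latency_ms(hit_ratio: float, cache_ms: float, db_ms: float) -> float:
    """Average read latency with lazy loading.

    A hit costs one cache lookup; a miss costs the lookup, the database query
    and the write-back to the cache (assumed to cost about one cache round trip).
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    miss_ms = cache_ms + db_ms + cache_ms
    return hit_ratio * cache_ms + (1.0 - hit_ratio) * miss_ms


# Hypothetical latencies: 1 ms for the cache, 10 ms for the database.
for ratio in (0.5, 0.7):
    print(ratio, expected_read_latency_ms(ratio, cache_ms=1.0, db_ms=10.0))
```

With those figures, a 50% hit ratio gives 6.5 ms on average and a 70% hit ratio about 4.3 ms, against 10 ms for the database alone; at very low hit ratios the cache makes reads slower, since every miss pays the extra round trips.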

Financial considerations

The following uses the scenario of an r5.large instance in the eu-west-1 region. Monthly costs are based on 720 hours (30 days), and the “Savings” columns show how much cheaper ElastiCache is than each service:

| Service | Instance | Hourly cost (on demand) | Monthly cost (on demand) | Savings (on demand) | Monthly cost (1-year reservation) | Savings (reserved) |
|---|---|---|---|---|---|---|
| ElastiCache | cache.r5.large | $0.241 | $173.52/month | – | $119.72/month | – |
| RDS/MySQL | db.r5.large | $0.265 | $190.80/month | 9% | $127.97/month | 6% |
| RDS/MSSQL Standard, single-AZ | db.r5.large | $1.025 | $738.00/month | 76% | $707.08/month | 83% |
| RDS/Oracle | db.r5.large | $0.536 | $385.92/month | 55% | $254.70/month | 53% |
| Aurora | db.r5.large | $0.32 | $230.40/month | 25% | $153.30/month | 22% |
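The savings columns can be recomputed from the listed prices. A short sketch, assuming monthly on-demand costs are hourly prices multiplied by 720 hours and that savings are expressed relative to the other service's cost:

```python
HOURS_PER_MONTH = 720  # monthly figures = hourly price x 720 hours (30 days)


def monthly_cost(hourly_usd: float) -> float:
    return hourly_usd * HOURS_PER_MONTH


def savings_pct(elasticache_cost: float, other_cost: float) -> int:
    """How much cheaper ElastiCache is than another service, in whole percent."""
    return round((1 - elasticache_cost / other_cost) * 100)


# On-demand hourly prices and 1-year reserved monthly costs from the table above.
ON_DEMAND_HOURLY = {"RDS/MySQL": 0.265, "RDS/MSSQL": 1.025, "RDS/Oracle": 0.536, "Aurora": 0.32}
RESERVED_MONTHLY = {"RDS/MySQL": 127.97, "RDS/MSSQL": 707.078, "RDS/Oracle": 254.697, "Aurora": 153.30}
ELASTICACHE_HOURLY, ELASTICACHE_RESERVED_MONTHLY = 0.241, 119.72

for service, hourly in ON_DEMAND_HOURLY.items():
    on_demand = savings_pct(monthly_cost(ELASTICACHE_HOURLY), monthly_cost(hourly))
    reserved = savings_pct(ELASTICACHE_RESERVED_MONTHLY, RESERVED_MONTHLY[service])
    print(f"{service}: {on_demand}% cheaper on demand, {reserved}% cheaper reserved")
```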

As we can see, an ElastiCache instance is about 10% cheaper than an RDS/MySQL instance, and 25% cheaper than an Aurora instance.

The gap widens with licensed DBMSs, since in a single-AZ and single-instance context, ElastiCache is about 50% cheaper than Oracle and 75% cheaper than MS SQL Server. This makes ElastiCache a comparatively inexpensive service.

Of course, a like-for-like comparison is not quite that simple, since ElastiCache is not capable of replacing your relational DBMS. However, for a relatively modest cost, it can take load off your database instances and let you downsize them, and therefore help you achieve significant cost savings.

Go further with ElastiCache

It is possible to use other Redis features, such as the “append-only file” (AOF). We haven’t discussed it in this article, as it wasn’t very relevant to the use case we looked at. If you don’t have encryption requirements and need greater performance, you could opt for ElastiCache/Memcached rather than Redis.

Other Redis usage patterns are available to cater for larger needs, such as using the “cluster mode” offered by ElastiCache which allows users to benefit from sharding, alongside other features.

ElastiCache can also be used for purposes other than object caching in a web context. For example, Redis Sorted Sets can be used to store, maintain and create a leaderboard ranking (e.g., for an online game) with great simplicity and speed.

Taking another example: Redis’s geospatial capabilities could help simplify the tracking of a vehicle fleet, be used in an IoT context, or add value as part of a recommendation engine.